Shining a light on Caustics with Shaders and React Three Fiber

Since my work on refraction and chromatic dispersion from early 2023, I have not ceased to experiment with light effects and shaders, always trying to strike the right balance between realism, aesthetics, and performance. However, there's one light effect that I was eager to rebuild this entire time: Caustics.

Those beautiful swirls of light become visible when light rays travel through a transmissive or transparent curved surface, such as a glass of water or the surface of a shallow lake, and converge on a surface after being refracted. I've been obsessed with Caustics since day one of working with shaders (ask @pixelbeat, he'll tell you). I saw countless examples reproducing the effect in Blender, Redshift, or WebGL/WebGPU, each one of them making me keener to build my own implementation and fully understand how to render them for my React Three Fiber projects.

I not only wanted to rebuild a Caustic effect with my shader knowledge from scratch, but I also wanted to reproduce one that was both real-time and somewhat physically based while also working with a diverse set of geometries. After working heads down, step-by-step, for a few weeks, I reached this goal and got some very satisfying results 🎉

While I documented my progress on Twitter showcasing all the steps and my train of thought going through this project, I wanted to dedicate a blog post to truly shine a light on caustics (🥁) by walking you through the details of the inner workings behind this effect. You'll see in this article how, by leveraging normals, render targets, and some math and shader code, you can render those beautiful and shiny swirls of light for your own creations.

In this first part, we'll look at the high-level concepts behind this project. To set the right expectations from the get-go: We're absolutely going to cheat our way through this. Indeed, if we wanted to reproduce Caustics with a high degree of accuracy, that project would probably fall into the domain of raytracing, which would be:

- Way out of reach given my current skill set as of writing this article.

- Very resource-intensive for the average computer out there, especially as we'd want most people to be able to see our work.

Thus, I opted for a simpler yet still somewhat physically based approach for this project:

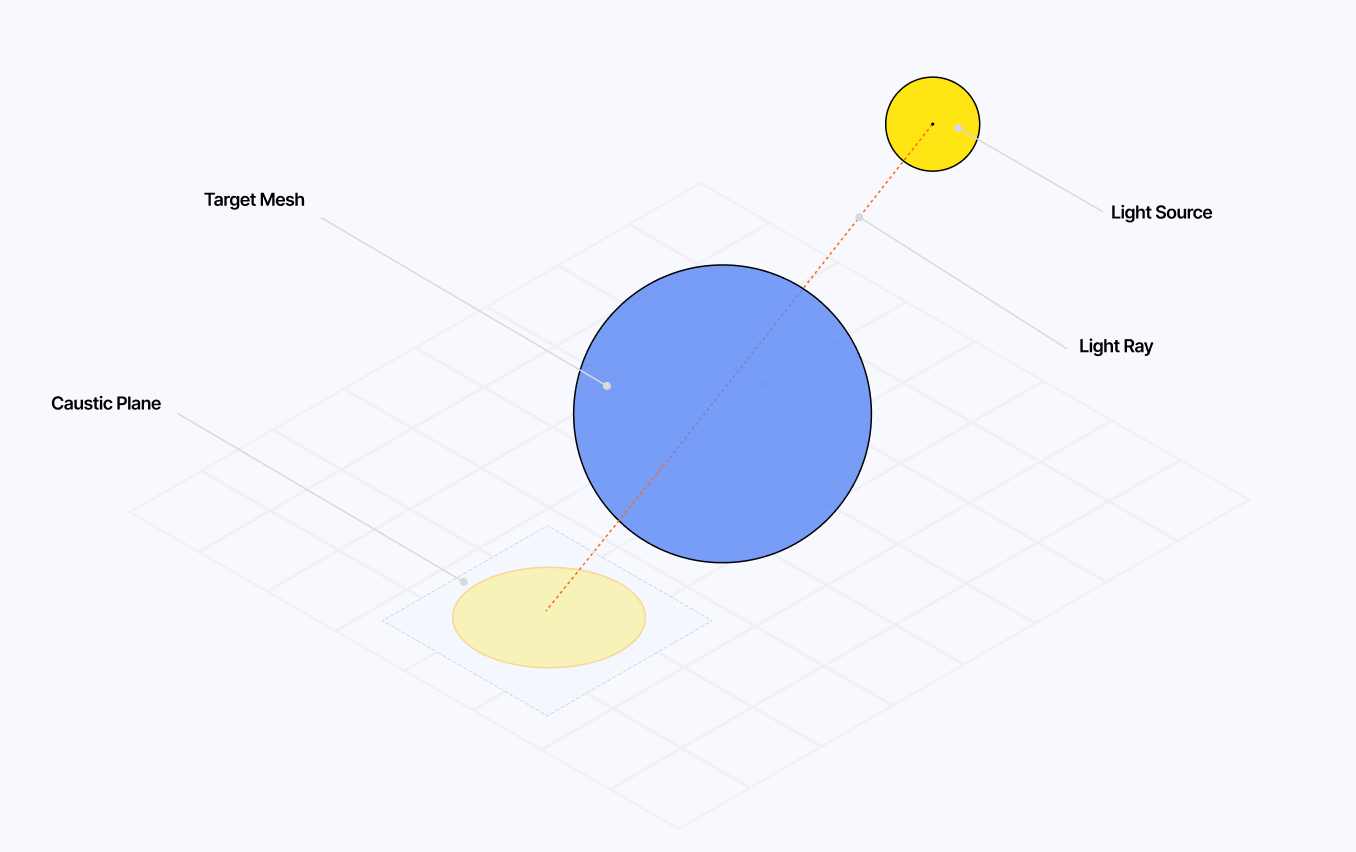

- We'll simulate in a fragment shader the refracted rays from a light source going through a target mesh.

- We'll render the resulting pattern in a caustic plane which we'll then scale and position accordingly based on the position of the light source in relation to our object.

Simulating how the caustic pattern works can seem quite tricky at first. However, if we look back at the definition established in the introduction, we can get hints for how to proceed. The light pattern we're aiming to render originates from rays hitting a curved surface, which nudges us toward relying on the Normal data of our target mesh (i.e. the surface data). On top of that, based on some preliminary research, knowing whether our rays of light converge or diverge after hitting our surface will determine the final look of our caustics.

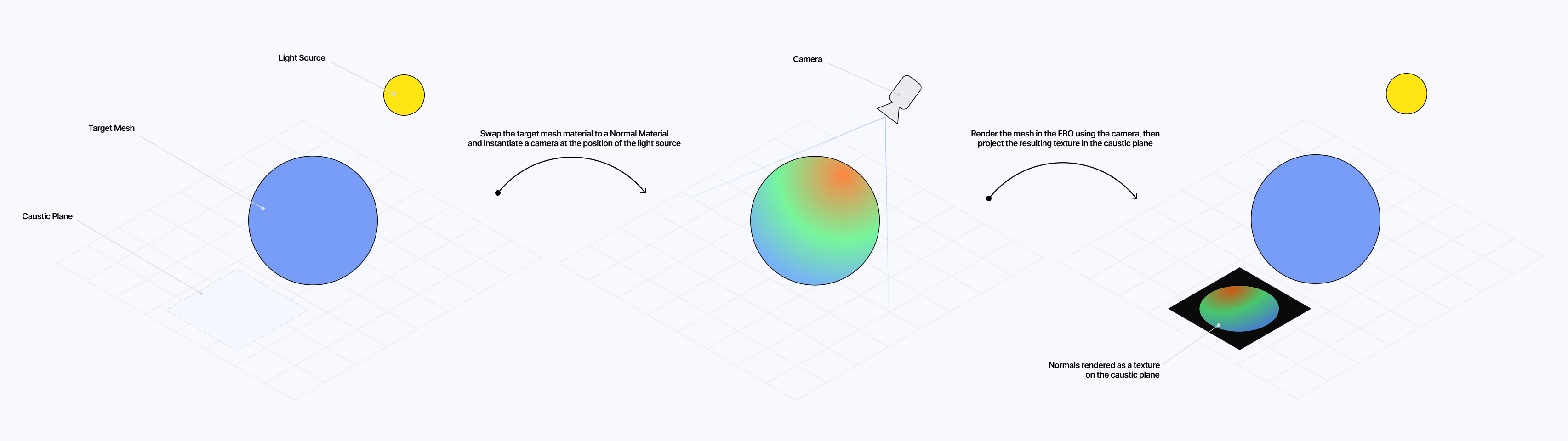

Let's take a stab at extracting the Normal data of our target mesh! With it, we'll know the overall "shape" of our mesh, which influences the final look of our caustics. Since we'll need to read that data down the line in a shader to simulate our Caustic effect, we want to have it available as a texture. That means it's time to dust off your good ol' render target skills because we'll need them here.

As always, we'll start by defining our render target, or Frame Buffer Object (FBO), using the `useFBO` hook provided by @react-three/drei: this is where we'll render our target mesh with a "normal" material and take a snapshot of it to have that data available as a texture later on.

Instantiating our normalRenderTarget in our Caustics scene

```jsx
import { useRef } from "react";
import { useFrame } from "@react-three/fiber";
import { MeshTransmissionMaterial, useFBO } from "@react-three/drei";

const Caustics = () => {
  const mesh = useRef();
  const causticsPlane = useRef();

  const normalRenderTarget = useFBO(2000, 2000, {});

  useFrame((state) => {
    const { gl } = state;
    // ...
  });

  return (
    <>
      <mesh ref={mesh} position={[0, 6.5, 0]}>
        <torusKnotGeometry args={[10, 3, 16, 100]} />
        {/* `rest`: the remaining material props, elided here */}
        <MeshTransmissionMaterial backside {...rest} />
      </mesh>
      <mesh
        ref={causticsPlane}
        rotation={[-Math.PI / 2, 0, 0]}
        position={[5, 0, 5]}
      >
        <planeGeometry />
        <meshBasicMaterial />
      </mesh>
    </>
  );
};
```

We'll also need a dedicated camera for our render target, which I intuitively placed where our light source would be since it will get us a view of the normals our light rays will interact with. That camera will point towards the center of the bounds of our target mesh using the lookAt function.

Setting up a dedicated camera for our render target

```jsx
const light = new THREE.Vector3(-10, 13, -10);

const normalRenderTarget = useFBO(2000, 2000, {});

const [normalCamera] = useState(
  () => new THREE.PerspectiveCamera(65, 1, 0.1, 1000)
);

useFrame((state) => {
  const { gl } = state;

  const bounds = new THREE.Box3().setFromObject(mesh.current, true);

  normalCamera.position.set(light.x, light.y, light.z);
  normalCamera.lookAt(
    bounds.getCenter(new THREE.Vector3(0, 0, 0)).x,
    bounds.getCenter(new THREE.Vector3(0, 0, 0)).y,
    bounds.getCenter(new THREE.Vector3(0, 0, 0)).z
  );
  normalCamera.up = new THREE.Vector3(0, 1, 0);

  //...
});
```

We now have all the elements to capture our Normal data and project it onto the "caustic plane":

- In our `useFrame` hook, we first swap the material of our target mesh with a material that renders the normals of our mesh. In this case, I used a custom `shaderMaterial` (optional, but gives us more flexibility as you'll see in the next part), but you can also use `normalMaterial`.

```jsx
// Custom Normal Material
const [normalMaterial] = useState(() => new NormalMaterial());

useFrame(() => {
  const originalMaterial = mesh.current.material;

  mesh.current.material = normalMaterial;
  mesh.current.material.side = THREE.BackSide;
});
```

- Then, we take a snapshot of our mesh by rendering it in our render target.

```jsx
gl.setRenderTarget(normalRenderTarget);
gl.render(mesh.current, normalCamera);
```

- Finally, we can restore the original material of our mesh and pass the resulting texture in the `map` property of our temporary caustic plane material, allowing us to visualize the output.

```jsx
mesh.current.material = originalMaterial;

causticsPlane.current.material.map = normalRenderTarget.texture;

gl.setRenderTarget(null);
```

With this small render pipeline, we should be able to see our Normal data visible on our "caustic plane" thanks to the texture data obtained through our render target. This will serve as the foundations of our Caustic effect!

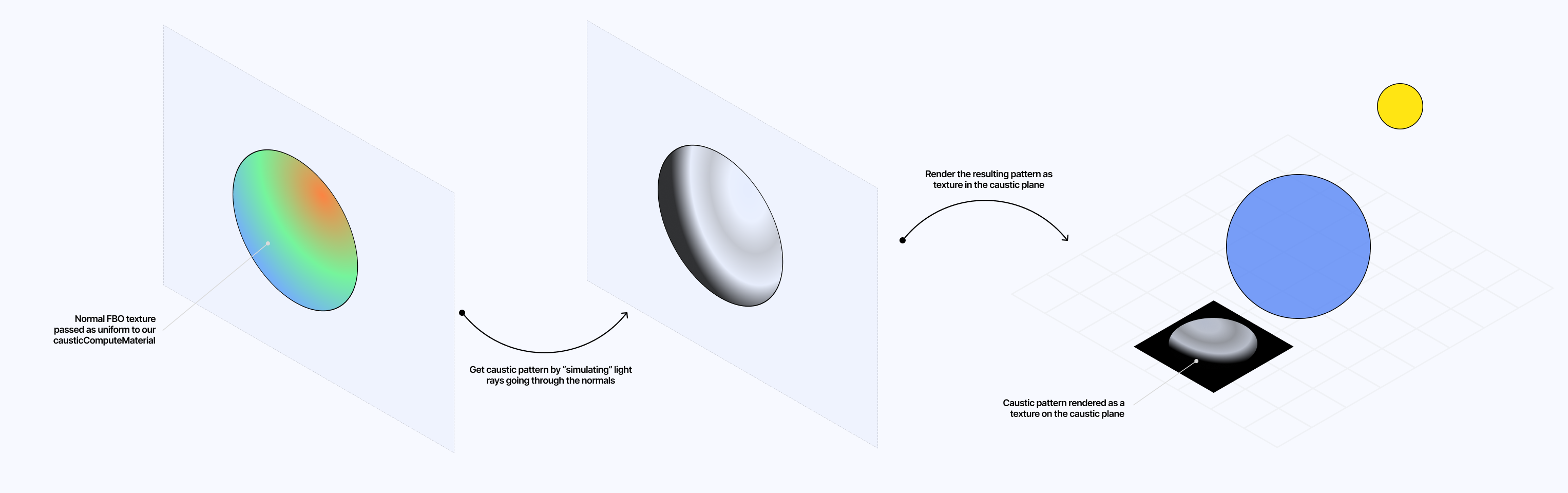

With what we just accomplished, we have, through our FBO, a texture representing the normals of our target mesh. Having that data as a texture is very versatile because not only can we render it as we just did, but more importantly we can pass it to other shaders to do some computation.

Which is exactly what we're going to do in this part!

We will take our Normal data and simulate light rays going through those normals and then interpret the output to create our caustics pattern.

Calculating caustics intensity

At first, I didn't know how to use my Normal data to obtain the desired effect as an output. I tried my luck with using a weird mix of sin functions in the fragment shader of my caustic plane, but that didn't yield something even remotely close to what I wanted to achieve:

On top of that, I also had this idea for my Caustics effect to be able to take on additional effects such as chromatic aberration or blur, as I really wanted the output not to be too sharp to look as natural as possible. Hence, I could not directly render the pattern onto the final plane; instead, I'd have to use an intermediate mesh with a custom shader material to do all the necessary math and computation I needed. Then, that would allow me through yet another FBO to apply as many effects to the output as I wanted on the final caustics plane itself.

To do so, we can leverage a FullScreenQuad geometry that we will not render within our scene but instead instantiate on its own and use it within our useFrame hook.

Setting up our causticsComputeRenderTarget and FullScreenQuad

```jsx
const causticsComputeRenderTarget = useFBO(2000, 2000, {});
const [causticsQuad] = useState(() => new FullScreenQuad());
```

We then attach to it a custom shaderMaterial that will perform the following tasks:

- Calculate the refracted ray vector from our light source going through the surface of our mesh, represented here by the Normal texture we created in the first part.

- Apply to each vertex of the `FullScreenQuad` mesh (passed as varyings to our fragment shader) the refracted ray vector.

- Use partial derivatives along the `x` and `y` axes for the original and the refracted position. When multiplied, the result lets us approximate a small surface neighboring the original and refracted vertex.

- Compare the resulting surfaces to determine the intensity of the caustics.
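The first step relies on GLSL's built-in `refract`. As a rough CPU-side sketch of what that built-in computes, here is the formula from the GLSL specification written in plain JavaScript. The helpers `dot`, `scale` and `sub` are hypothetical names introduced for this illustration and are not part of the project:

```javascript
// Minimal vector helpers on [x, y, z] arrays (hypothetical, for illustration only).
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const scale = (v, s) => v.map((c) => c * s);
const sub = (a, b) => a.map((c, i) => c - b[i]);

// Mirrors GLSL's refract(I, N, eta): I is the normalized incident direction,
// N the normalized surface normal, eta the ratio of refractive indices.
function refract(I, N, eta) {
  const nDotI = dot(N, I);
  const k = 1.0 - eta * eta * (1.0 - nDotI * nDotI);
  // k < 0 means total internal reflection: GLSL returns a zero vector.
  if (k < 0.0) return [0, 0, 0];
  return sub(scale(I, eta), scale(N, eta * nDotI + Math.sqrt(k)));
}

// A ray hitting the surface head-on is not bent, whatever the ratio.
const straight = refract([0, -1, 0], [0, 1, 0], 1.0 / 1.25);
console.log(straight);
```

The ratio `1.0 / 1.25` is the one used in the shader of this article; a head-on ray comes out (up to floating-point rounding) pointing in the same direction it came in, while slanted rays get bent toward the normal.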

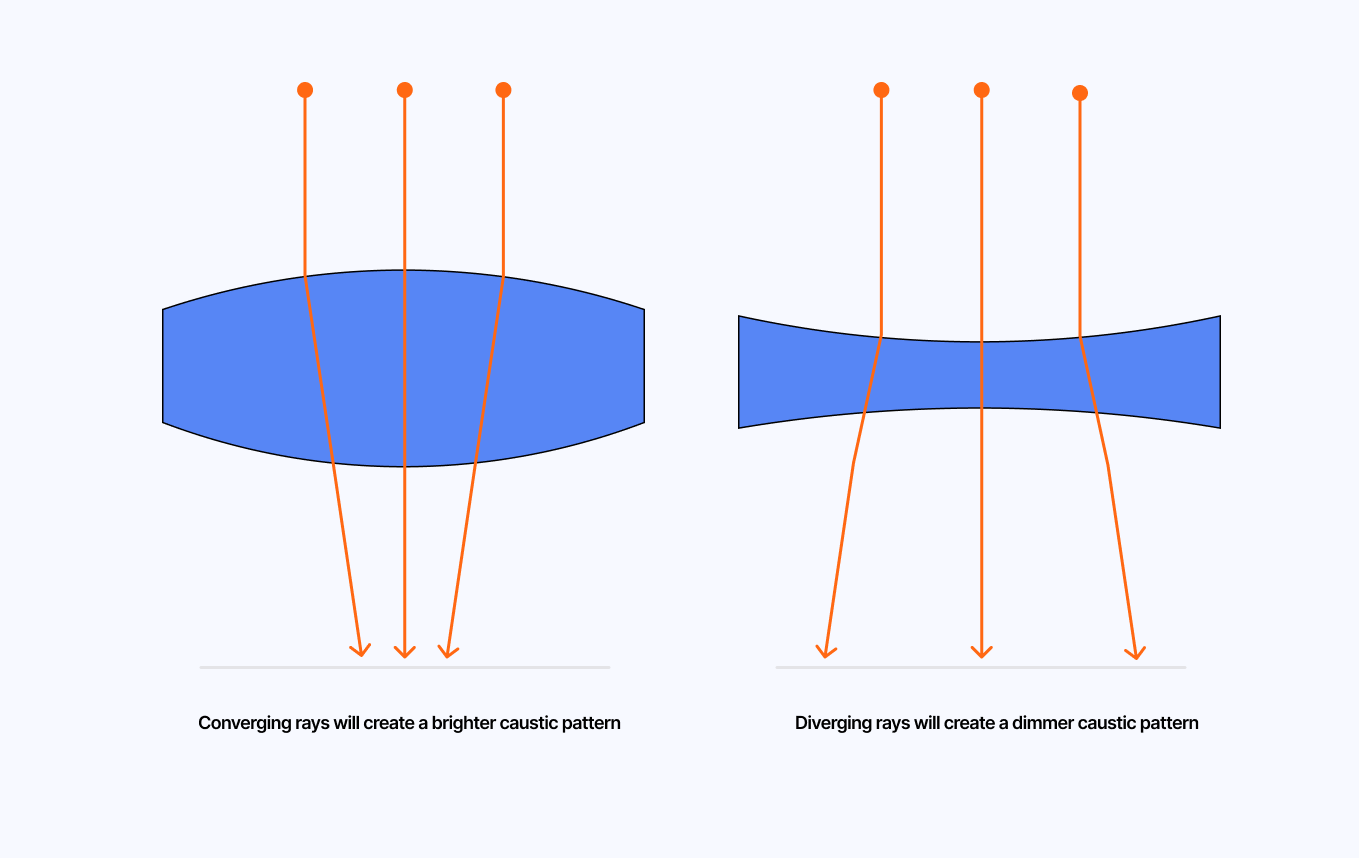

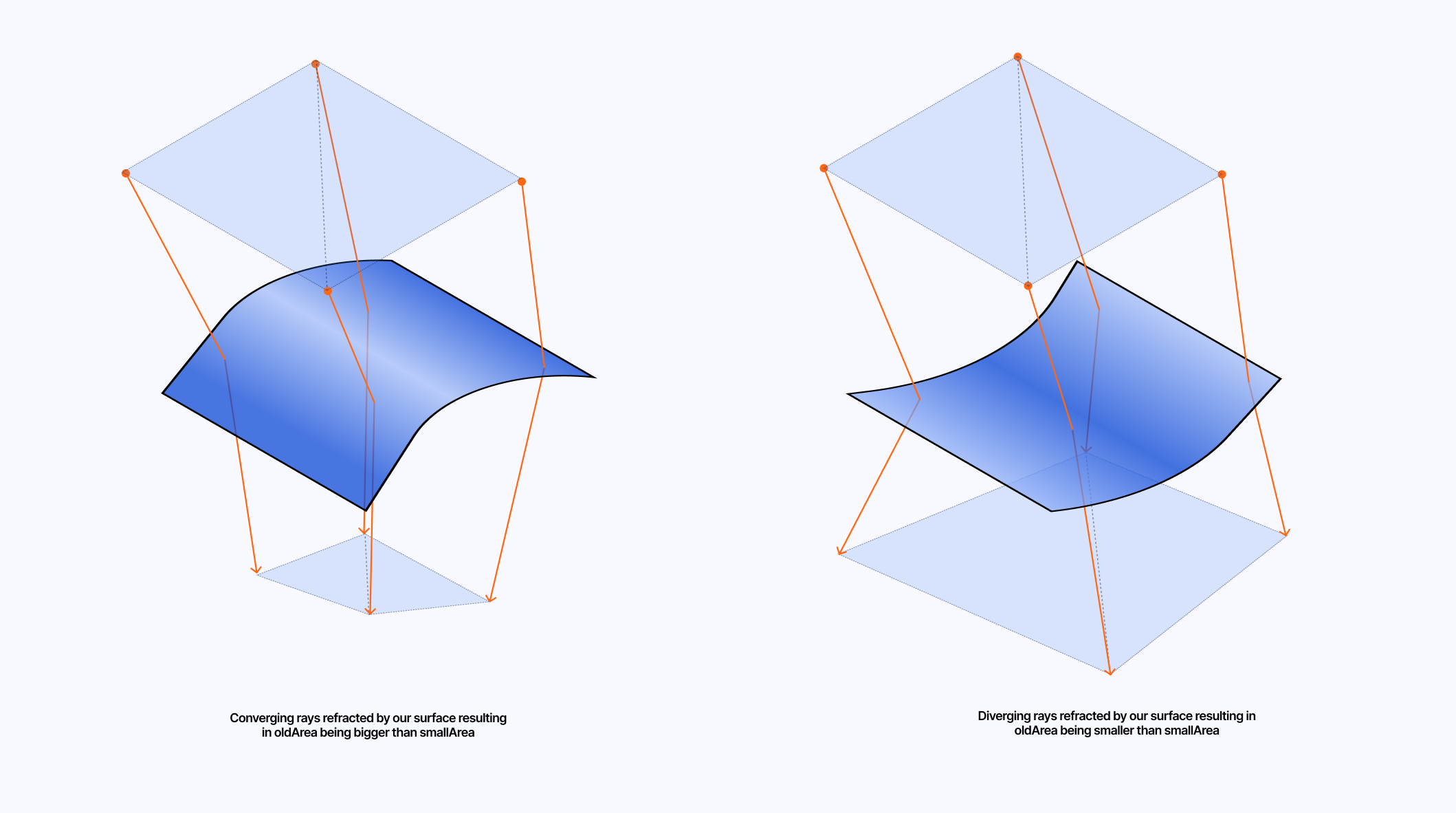

Obtaining those surfaces before and after refraction is the key to rendering our caustic pattern:

- A ratio `oldArea/newArea` above `1` signifies our rays have converged. Thus, the caustic intensity should be higher.

- On the other hand, a ratio `oldArea/newArea` below `1` means that our rays have diverged and that our caustic intensity should be lower.
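To build some intuition for that ratio outside of a shader, here is a small JavaScript sketch that replaces `dFdx`/`dFdy` with forward differences. The functions `area` and `causticRatio`, as well as the three sample mappings, are assumptions made up for this example:

```javascript
// Hypothetical helpers working on [x, y, z] arrays.
const sub = (a, b) => a.map((c, i) => c - b[i]);
const length = (v) => Math.hypot(...v);

// Approximates length(dFdx(p)) * length(dFdy(p)) with forward differences:
// posAt(x, y) returns the position associated with the "fragment" (x, y).
function area(posAt, x, y, h = 1e-3) {
  const p = posAt(x, y);
  const dx = sub(posAt(x + h, y), p);
  const dy = sub(posAt(x, y + h), p);
  return length(dx) * length(dy);
}

// oldArea / newArea: above 1 when rays converge, below 1 when they diverge.
function causticRatio(oldPosAt, newPosAt, x, y) {
  return area(oldPosAt, x, y) / area(newPosAt, x, y);
}

const original = (x, y) => [x, y, 0];
const converging = (x, y) => [0.5 * x, 0.5 * y, 0]; // rays squeezed together
const diverging = (x, y) => [2 * x, 2 * y, 0]; // rays spread apart

console.log(causticRatio(original, converging, 0.3, 0.7)); // close to 4
console.log(causticRatio(original, diverging, 0.3, 0.7)); // close to 0.25
```

Halving the spacing between neighboring rays shrinks the covered area by four, so the same amount of light lands on a quarter of the surface and the ratio goes up accordingly.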

Below, you will find the corresponding fragment shader code that performs the steps we just highlighted:

CausticsComputeMaterial fragment shader

```glsl
uniform sampler2D uTexture;
uniform vec3 uLight;

varying vec2 vUv;
// Position of the vertex of the current fragment
varying vec3 vPosition;

void main() {
  vec2 uv = vUv;

  vec3 normalTexture = texture2D(uTexture, uv).rgb;
  vec3 normal = normalize(normalTexture);
  vec3 lightDir = normalize(uLight);

  vec3 ray = refract(lightDir, normal, 1.0 / 1.25);

  vec3 newPos = vPosition.xyz + ray;
  vec3 oldPos = vPosition.xyz;

  float lightArea = length(dFdx(oldPos)) * length(dFdy(oldPos));
  float newLightArea = length(dFdx(newPos)) * length(dFdy(newPos));

  float value = lightArea / newLightArea;

  gl_FragColor = vec4(vec3(value), 1.0);
}
```

On top of that, I applied a few tweaks as I often do in my shader code. That is more subjective and enables me to reach what I originally had in mind for my Caustic effect, so take those edits with a grain of salt:

Extra tweaks to the final value from

```glsl
uniform sampler2D uTexture;
uniform vec3 uLight;
uniform float uIntensity;

varying vec2 vUv;
varying vec3 vPosition;

void main() {
  vec2 uv = vUv;

  vec3 normalTexture = texture2D(uTexture, uv).rgb;
  vec3 normal = normalize(normalTexture);
  vec3 lightDir = normalize(uLight);

  vec3 ray = refract(lightDir, normal, 1.0 / 1.25);

  vec3 newPos = vPosition.xyz + ray;
  vec3 oldPos = vPosition.xyz;

  float lightArea = length(dFdx(oldPos)) * length(dFdy(oldPos));
  float newLightArea = length(dFdx(newPos)) * length(dFdy(newPos));

  float value = lightArea / newLightArea;
  float scale = clamp(value, 0.0, 1.0) * uIntensity;
  scale *= scale;

  gl_FragColor = vec4(vec3(scale), 1.0);
}
```

- I added a `uIntensity` uniform so I could manually increase/decrease how bright the resulting caustic effect would render.

- I made sure to `clamp` the value between 0 and 1 (see warning below).

- I squared the result to ensure the brighter areas get brighter and the dimmer areas get dimmer, thus allowing for a more striking light effect.
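To get a feel for what these tweaks do to the raw ratio, the same arithmetic can be replayed in JavaScript. This is only an illustrative sketch: `clamp` and `tweak` are hypothetical helper names, and the sample ratios and the intensity of `1.5` are made-up values:

```javascript
const clamp = (v, min, max) => Math.min(Math.max(v, min), max);

// Same arithmetic as the tweaked shader: clamp, scale by intensity, then square.
function tweak(value, intensity) {
  const scaled = clamp(value, 0.0, 1.0) * intensity;
  return scaled * scaled;
}

// Sample area ratios (made-up values) run through the tweak with intensity 1.5.
for (const ratio of [0.2, 0.5, 0.9, 4.0]) {
  console.log(ratio, "->", tweak(ratio, 1.5));
}
```

Squaring spreads the low and high values further apart, and because of the clamp, any ratio above 1 ends up at the same ceiling: the intensity squared.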

Finally, we can combine all that and assign what I dubbed the CausticsComputeMaterial to our FullScreenQuad and render it in a dedicated FBO.

Using the causticsComputeMaterial in our scene

```jsx
const [causticsComputeMaterial] = useState(() => new CausticsComputeMaterial());

useFrame((state) => {
  const { gl } = state;

  const bounds = new THREE.Box3().setFromObject(mesh.current, true);

  normalCamera.position.set(light.x, light.y, light.z);
  normalCamera.lookAt(
    bounds.getCenter(new THREE.Vector3(0, 0, 0)).x,
    bounds.getCenter(new THREE.Vector3(0, 0, 0)).y,
    bounds.getCenter(new THREE.Vector3(0, 0, 0)).z
  );
  normalCamera.up = new THREE.Vector3(0, 1, 0);

  const originalMaterial = mesh.current.material;

  mesh.current.material = normalMaterial;
  mesh.current.material.side = THREE.BackSide;

  gl.setRenderTarget(normalRenderTarget);
  gl.render(mesh.current, normalCamera);

  mesh.current.material = originalMaterial;

  causticsQuad.material = causticsComputeMaterial;
  causticsQuad.material.uniforms.uTexture.value = normalRenderTarget.texture;
  causticsQuad.material.uniforms.uLight.value = light;
  causticsQuad.material.uniforms.uIntensity.value = intensity;

  gl.setRenderTarget(causticsComputeRenderTarget);
  causticsQuad.render(gl);

  causticsPlane.current.material.map = causticsComputeRenderTarget.texture;

  gl.setRenderTarget(null);
});
```

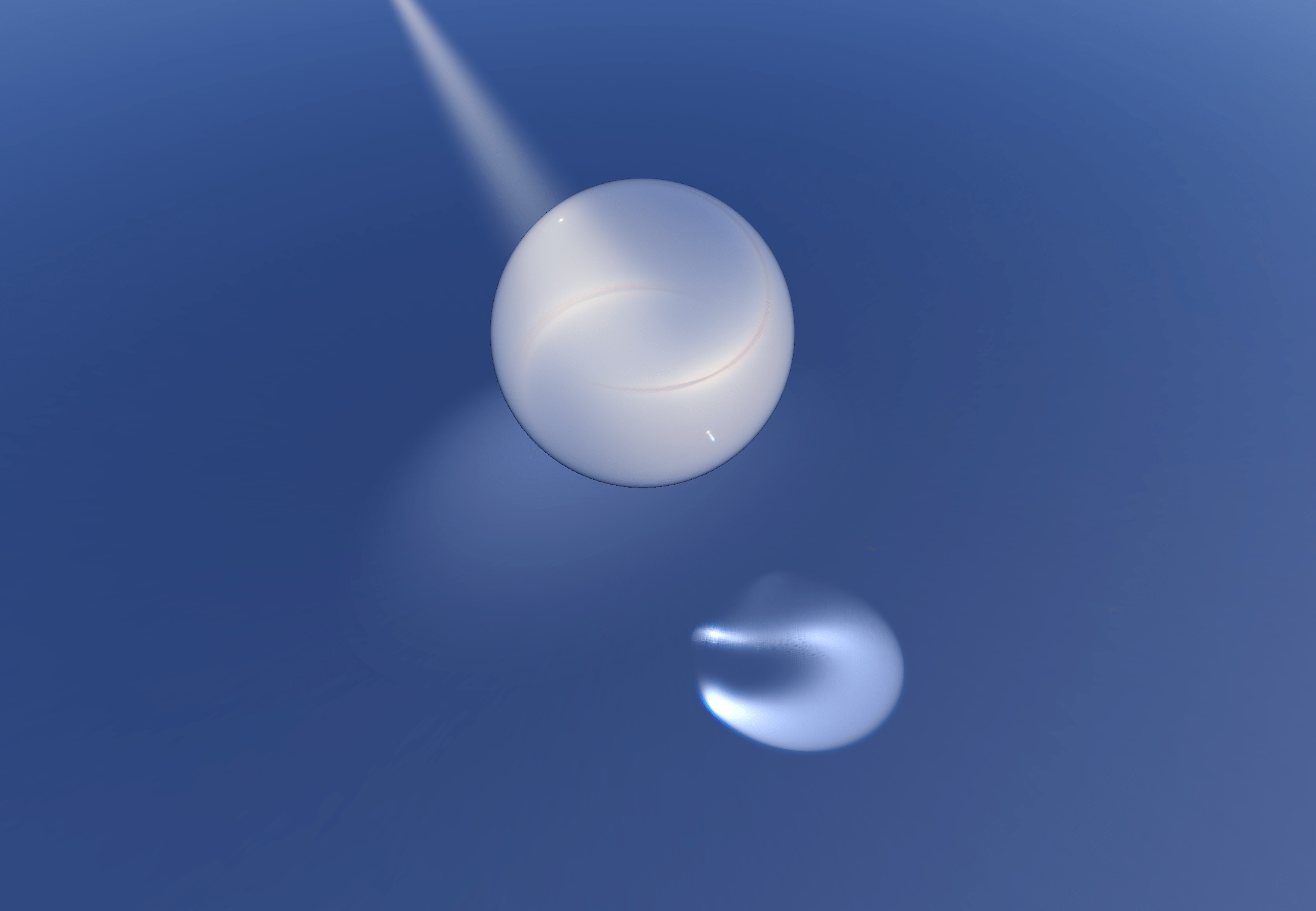

The resulting code lets us observe a glimpse of Caustics projected onto the ground ✨

Creating beautiful swirls of light

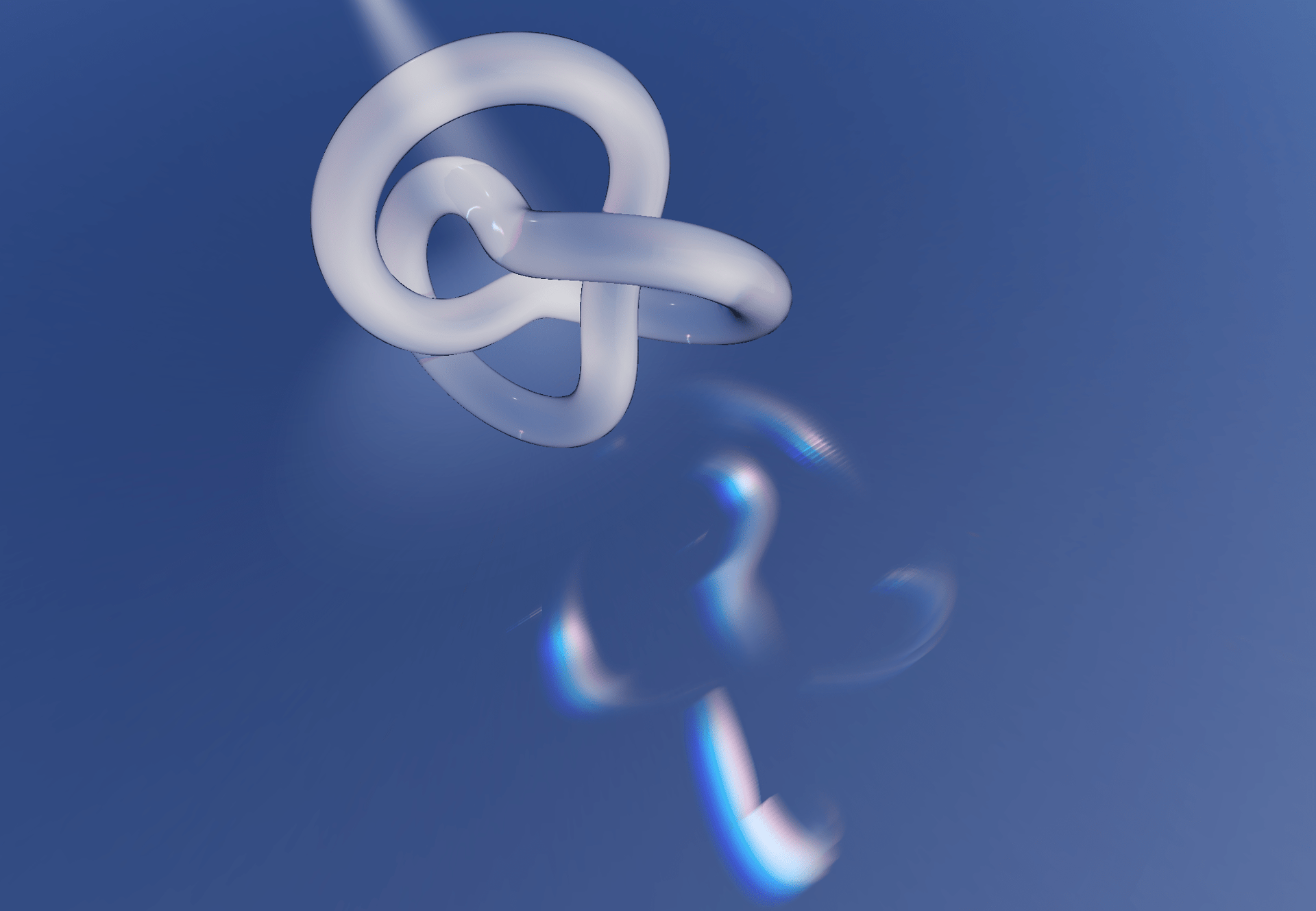

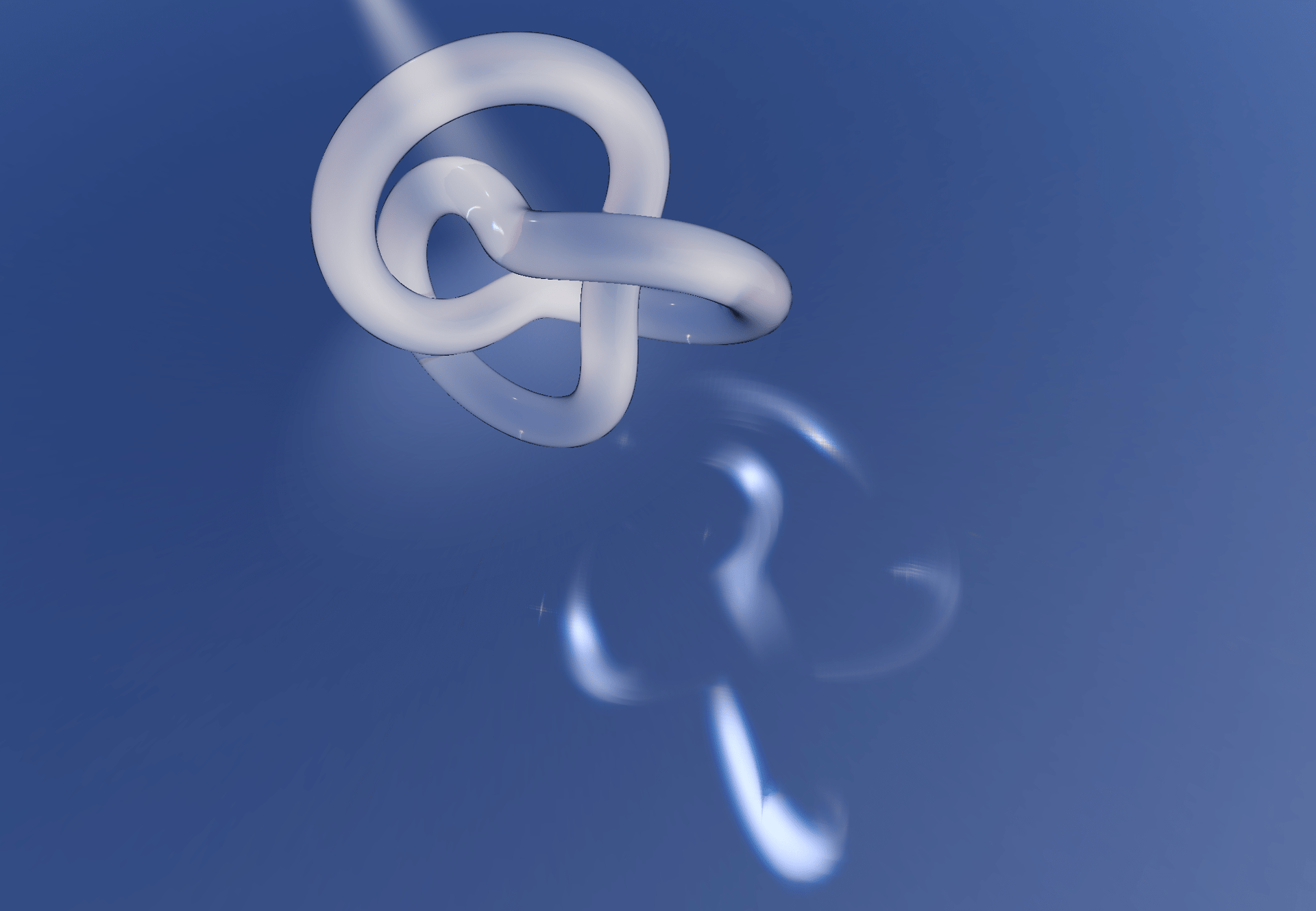

The result we just obtained looks great but presents a few subjective issues that are bothering me:

- It looks a bit too sharp to my taste, and because of that, we also see a lot of artifacts/grain in the final render (probably from the mesh not having enough vertices).

- The caustic plane does not blend with the ground: that black frame surrounding the pattern really has to go.

We can alleviate these issues by creating a final causticsPlaneMaterial that takes the texture we obtained from our causticsComputeRenderTarget and gently modifies it before rendering it on our plane.

I first decided to implement a chromatic aberration effect on top of our caustic effect. If you're familiar with some of my work around light effects, I'm a big fan of chromatic aberration, and when applied correctly, I think it really goes a long way to make your scene/mesh look gorgeous.

Refraction and Chromatic Aberration fragment shader

```glsl
uniform sampler2D uTexture;
uniform float uAberration;

varying vec2 vUv;

const int SAMPLES = 16;

float random(vec2 p){
  return fract(sin(dot(p.xy ,vec2(12.9898,78.233))) * 43758.5453);
}

vec3 sat(vec3 rgb, float adjustment) {
  const vec3 W = vec3(0.2125, 0.7154, 0.0721);
  vec3 intensity = vec3(dot(rgb, W));
  return mix(intensity, rgb, adjustment);
}

void main() {
  vec2 uv = vUv;
  vec4 color = vec4(0.0);

  vec3 refractCol = vec3(0.0);

  for ( int i = 0; i < SAMPLES; i ++ ) {
    float noiseIntensity = 0.01;
    float noise = random(uv) * noiseIntensity;
    float slide = float(i) / float(SAMPLES) * 0.1 + noise;

    refractCol.r += texture2D(uTexture, uv + (uAberration * slide * 1.0) ).r;
    refractCol.g += texture2D(uTexture, uv + (uAberration * slide * 2.0) ).g;
    refractCol.b += texture2D(uTexture, uv + (uAberration * slide * 3.0) ).b;
  }
  // Divide by the number of layers to normalize colors (rgb values can be worth up to the value of SAMPLES)
  refractCol /= float(SAMPLES);
  refractCol = sat(refractCol, 1.265);

  color = vec4(refractCol.r, refractCol.g, refractCol.b, 1.0);

  gl_FragColor = vec4(color.rgb, 1.0);
}
```

While this shader worked as expected, it presented some issues: it created visible stripes as it moved each color channel of each texture fragment in the same direction. To work around this, I added code to flip the direction of the aberration through each loop to create some randomness.

Flipping the direction of the chromatic aberration

```glsl
float flip = -0.5;

for ( int i = 0; i < SAMPLES; i ++ ) {
  float noiseIntensity = 0.01;
  float noise = random(uv) * noiseIntensity;
  float slide = float(i) / float(SAMPLES) * 0.1 + noise;

  float mult = i % 2 == 0 ? 1.0 : -1.0;
  flip *= mult;

  vec2 dir = i % 2 == 0 ? vec2(flip, 0.0) : vec2(0.0, flip);

  // Apply the color shift and refraction to each color channel (r,g,b) of the texture passed in uTexture;
  refractCol.r += texture2D(uTexture, uv + (uAberration * slide * dir * 1.0) ).r;
  refractCol.g += texture2D(uTexture, uv + (uAberration * slide * dir * 2.0) ).g;
  refractCol.b += texture2D(uTexture, uv + (uAberration * slide * dir * 3.0) ).b;
}
```

Notice how this simple "flip" operation had multiple benefits:

- It solved the issue of the stripes that were degrading the quality of the output.

- It blurred the output, making our light patterns less sharp and more natural-looking.

That is precisely what we wanted! In some cases, if we look a bit closer, we can still see some artifacts from the chromatic aberration, but from afar, it looks quite alright (at least it does to me 😅).
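To see why the flip breaks up the stripes, it helps to trace which direction each sample ends up using. The sketch below replays the `flip`/`dir` bookkeeping of the loop in JavaScript; the function name `aberrationDirections` is a hypothetical one chosen for this example:

```javascript
// Replays the `flip` / `dir` bookkeeping of the shader loop on the CPU.
function aberrationDirections(samples) {
  const dirs = [];
  let flip = -0.5;
  for (let i = 0; i < samples; i++) {
    const mult = i % 2 === 0 ? 1.0 : -1.0;
    flip *= mult;
    dirs.push(i % 2 === 0 ? [flip, 0.0] : [0.0, flip]);
  }
  return dirs;
}

console.log(aberrationDirections(4));
// [ [ -0.5, 0 ], [ 0, 0.5 ], [ 0.5, 0 ], [ 0, -0.5 ] ]
```

The directions cycle through left, up, right, and down every four samples, so the color channels get smeared around each fragment instead of always being pushed the same way, which is also where the blur comes from.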

The last thing to tackle is to make our caustic plane blend with the surroundings. We can remove the black frame visible around our light patterns by setting a couple of blending options for our causticsPlaneMaterial after instantiating it:

Setting the proper blending option for our caustic plane to blend in

```jsx
const [causticsPlaneMaterial] = useState(() => new CausticsPlaneMaterial());
causticsPlaneMaterial.transparent = true;
causticsPlaneMaterial.blending = THREE.CustomBlending;
causticsPlaneMaterial.blendSrc = THREE.OneFactor;
causticsPlaneMaterial.blendDst = THREE.SrcAlphaFactor;
```

And just like that, the black frame is gone, and our caustic plane blends perfectly with its surroundings! You can see all the combined code in the code sandbox below 👇.
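Here is a rough sketch of why those two factors make the black frame disappear. It assumes the default `AddEquation` of Three.js custom blending and the alpha of `1.0` that the fragment shader outputs; the `blend` function itself is a hypothetical stand-in for what the GPU does per pixel:

```javascript
// With CustomBlending and the default AddEquation, each channel is computed as:
// result = src * srcFactor + dst * dstFactor
function blend(src, dst) {
  const srcFactor = 1.0; // THREE.OneFactor
  const dstFactor = src.a; // THREE.SrcAlphaFactor
  // Clamped to 1.0, as a regular 8-bit framebuffer would do.
  const mix = (s, d) => Math.min(1.0, s * srcFactor + d * dstFactor);
  return { r: mix(src.r, dst.r), g: mix(src.g, dst.g), b: mix(src.b, dst.b) };
}

const ground = { r: 0.2, g: 0.4, b: 0.6 };
console.log(blend({ r: 0, g: 0, b: 0, a: 1 }, ground)); // black texel: ground unchanged
console.log(blend({ r: 0.5, g: 0.5, b: 0.5, a: 1 }, ground)); // lit texel: ground brightened
```

With an alpha of 1, the setup boils down to additive blending: black areas of the caustic texture add nothing to the ground, while the bright swirls are added on top of it.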

We now have a convincing caustic effect that creates a pattern of light based on the Normal data of the target mesh. However, if we move the position of our light in the demo we just saw above, the whole scene does not feel natural. That's because we still need to do some work to position and scale our caustic plane based on the position of that light source relative to our mesh.

To approach this problem, I first attempted to project the bounds of our target mesh on the ground. By knowing where on the ground the bounds of our mesh are, I could deduce

- The center of the bounds: the vector that we'll need to pass as the position of the caustics plane.

- The distance from the center to the furthest projected vertex, which we could pass as the scale of the caustics plane.

Doing this will make sure that the resulting size and position of the plane not only make sense but also fit our caustics pattern within its bounds.

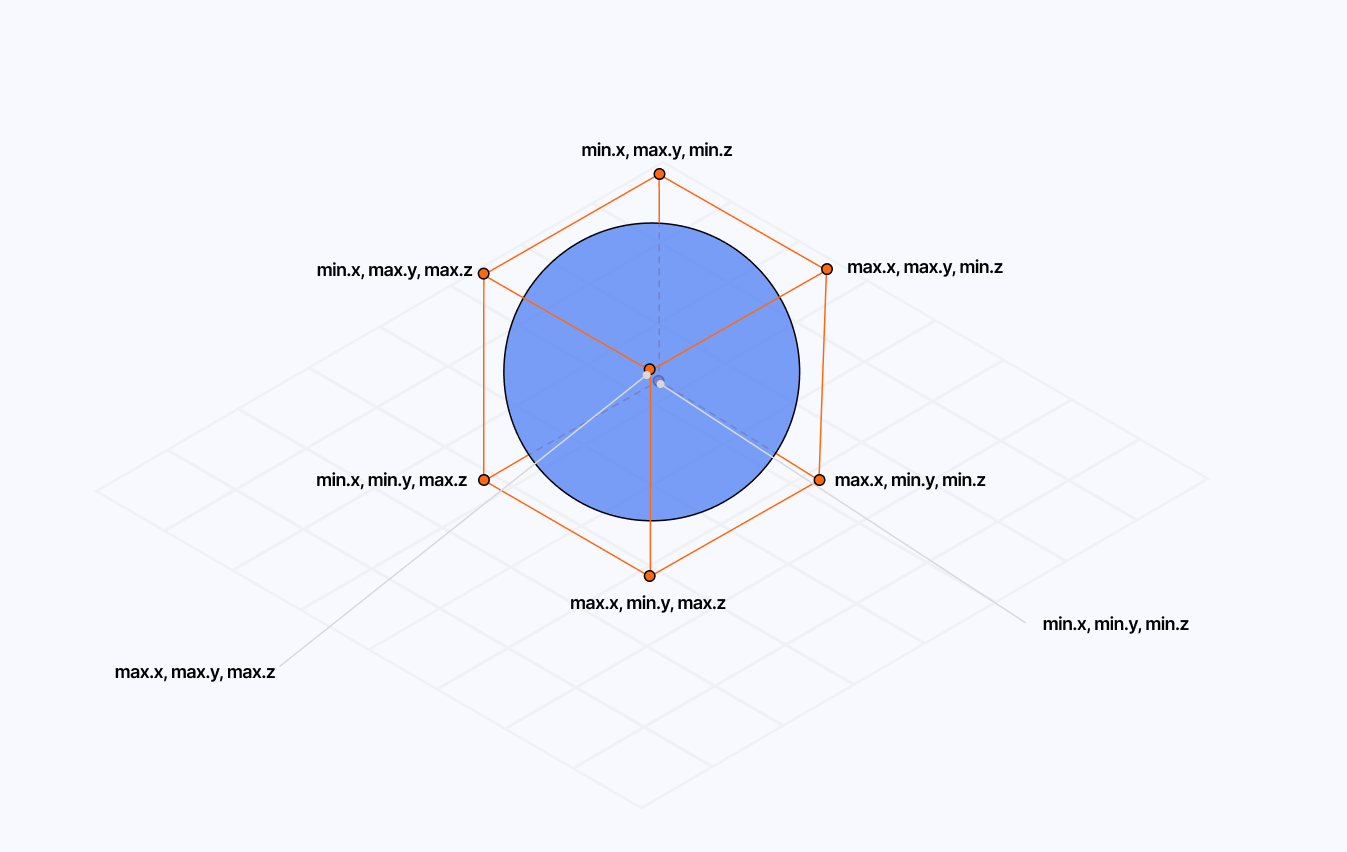

Building a "bounding cube" for our mesh

The first step consists of building a bounding cube around our mesh. We luckily did half the work already in the first part of this article when working on getting our Normal data using the following Three.js function:

```jsx
useFrame((state) => {
  const { gl } = state;

  const bounds = new THREE.Box3().setFromObject(mesh.current, true);

  //...
});
```

The bounds variable contains a min and max field representing the coordinates of the minimum and maximum corners of the smallest cube containing our mesh. From there, we can extrapolate the remaining six corners/vertices of the bounding cube as follows:

Getting the bounds vertices of our target mesh

```jsx
useFrame((state) => {
  const { gl } = state;

  const bounds = new THREE.Box3().setFromObject(mesh.current, true);

  let boundsVertices = [];
  boundsVertices.push(
    new THREE.Vector3(bounds.min.x, bounds.min.y, bounds.min.z)
  );
  boundsVertices.push(
    new THREE.Vector3(bounds.min.x, bounds.min.y, bounds.max.z)
  );
  boundsVertices.push(
    new THREE.Vector3(bounds.min.x, bounds.max.y, bounds.min.z)
  );
  boundsVertices.push(
    new THREE.Vector3(bounds.min.x, bounds.max.y, bounds.max.z)
  );
  boundsVertices.push(
    new THREE.Vector3(bounds.max.x, bounds.min.y, bounds.min.z)
  );
  boundsVertices.push(
    new THREE.Vector3(bounds.max.x, bounds.min.y, bounds.max.z)
  );
  boundsVertices.push(
    new THREE.Vector3(bounds.max.x, bounds.max.y, bounds.min.z)
  );
  boundsVertices.push(
    new THREE.Vector3(bounds.max.x, bounds.max.y, bounds.max.z)
  );

  //...
});
```
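If the eight `push` calls feel a bit verbose, the same corners can be enumerated with three nested loops. This is just an alternative sketch using plain objects instead of `THREE.Vector3`; `boxCorners` is a hypothetical helper, not something from Three.js:

```javascript
// Builds the 8 corners of an axis-aligned box from its min and max corners.
// `bounds` only needs { min: {x, y, z}, max: {x, y, z} }, like a THREE.Box3.
function boxCorners(bounds) {
  const corners = [];
  for (const x of [bounds.min.x, bounds.max.x]) {
    for (const y of [bounds.min.y, bounds.max.y]) {
      for (const z of [bounds.min.z, bounds.max.z]) {
        corners.push({ x, y, z });
      }
    }
  }
  return corners;
}

const corners = boxCorners({
  min: { x: -1, y: 0, z: -1 },
  max: { x: 1, y: 2, z: 1 },
});
console.log(corners.length); // 8
```

The corners come out in the same order as in the snippet above, so in the actual scene you could wrap each one in a `new THREE.Vector3(x, y, z)` and keep the rest of the code unchanged.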

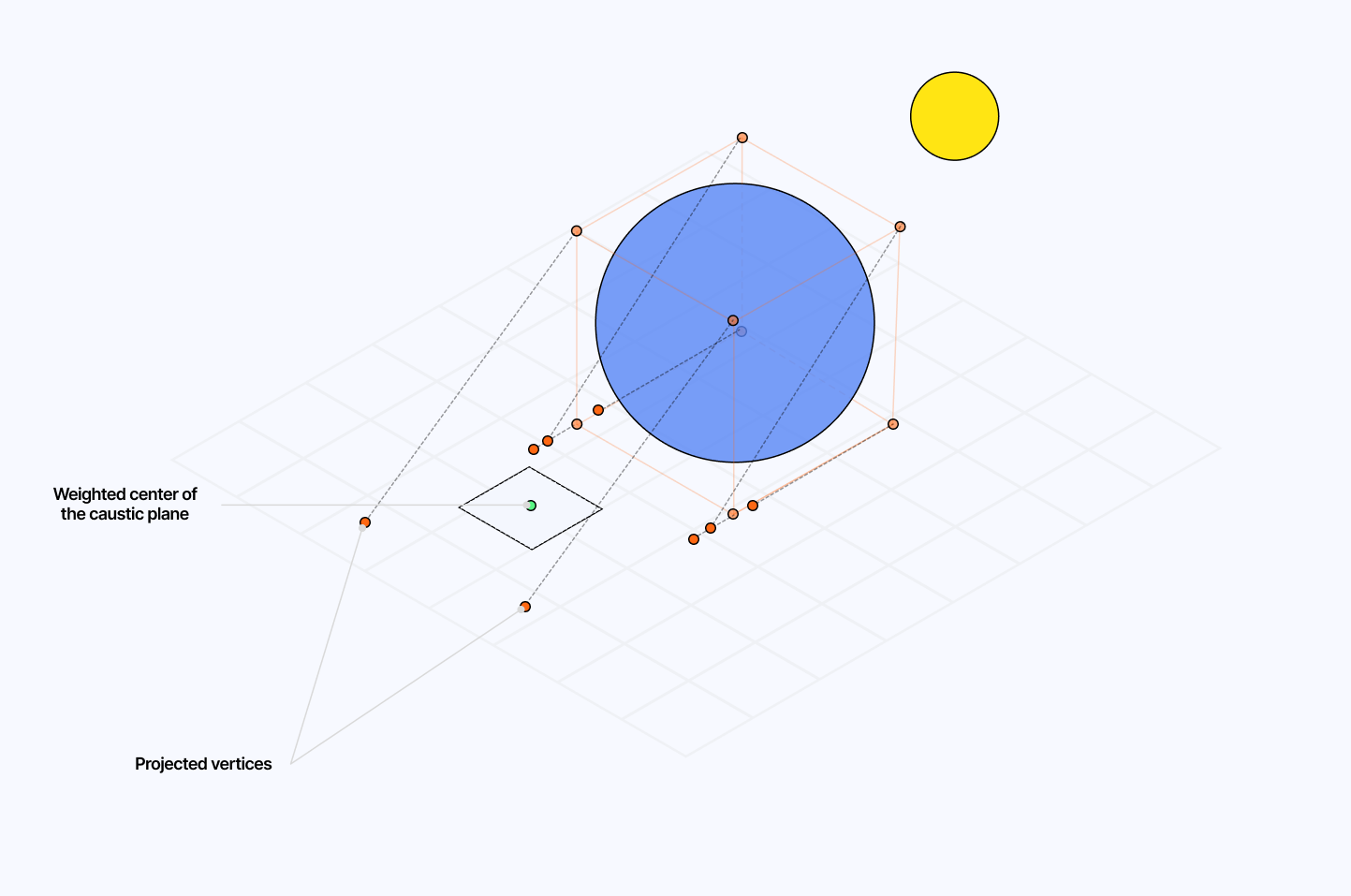

Projecting the vertices of the bounding cube and positioning our plane

Here, we want to use the vertices of our bounding cube and calculate their projected coordinates in the direction of the light to intersect with the ground.

The generalized formula for such projection looks as follows:

projectedVertex = vertex + lightDir * ((planeY - vertex.y) / lightDir.y)

If we transpose that formula to our code and consider our planeY value to be 0, since we're aiming to project on the ground, we get the following code:

Projected bounding box vertices

```jsx
const lightDir = new THREE.Vector3(light.x, light.y, light.z).normalize();

// Calculates the coordinates of the vertices projected onto the ground
// plane (y = 0) along the light direction
const newVertices = boundsVertices.map((v) => {
  const newX = v.x + lightDir.x * (-v.y / lightDir.y);
  const newY = v.y + lightDir.y * (-v.y / lightDir.y);
  const newZ = v.z + lightDir.z * (-v.y / lightDir.y);

  return new THREE.Vector3(newX, newY, newZ);
});
```
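As a quick sanity check of that formula, here is a standalone sketch with plain objects instead of `THREE.Vector3`. The helper `projectOnGround` is a name I made up for this example; the light position and the mesh position are the ones used earlier in this article:

```javascript
// Projects `vertex` along `lightDir` until it hits the horizontal plane y = planeY.
function projectOnGround(vertex, lightDir, planeY = 0) {
  const t = (planeY - vertex.y) / lightDir.y;
  return {
    x: vertex.x + lightDir.x * t,
    y: vertex.y + lightDir.y * t,
    z: vertex.z + lightDir.z * t,
  };
}

const light = { x: -10, y: 13, z: -10 };
const len = Math.hypot(light.x, light.y, light.z);
const lightDir = { x: light.x / len, y: light.y / len, z: light.z / len };

// Position of the torus knot from the first part, at [0, 6.5, 0].
console.log(projectOnGround({ x: 0, y: 6.5, z: 0 }, lightDir));
// lands near { x: 5, y: 0, z: 5 }
```

That result happens to match the hard-coded `[5, 0, 5]` position of the caustic plane in the first snippet of this article. Note that the formula divides by `lightDir.y`, so it has no answer for a perfectly horizontal light.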

By leveraging the projected vertices, we can now obtain the center position by summing those coordinates and dividing the result by the total number of vertices, i.e., just averaging all coordinates.

We can then assign that center coordinate as the position vector of our plane, which translates to the following code:

Calculating the center of our caustic plane

```jsx
const centerPos = newVertices
  .reduce((a, b) => a.add(b), new THREE.Vector3(0, 0, 0))
  .divideScalar(newVertices.length);

causticsPlane.current.position.set(centerPos.x, centerPos.y, centerPos.z);
```

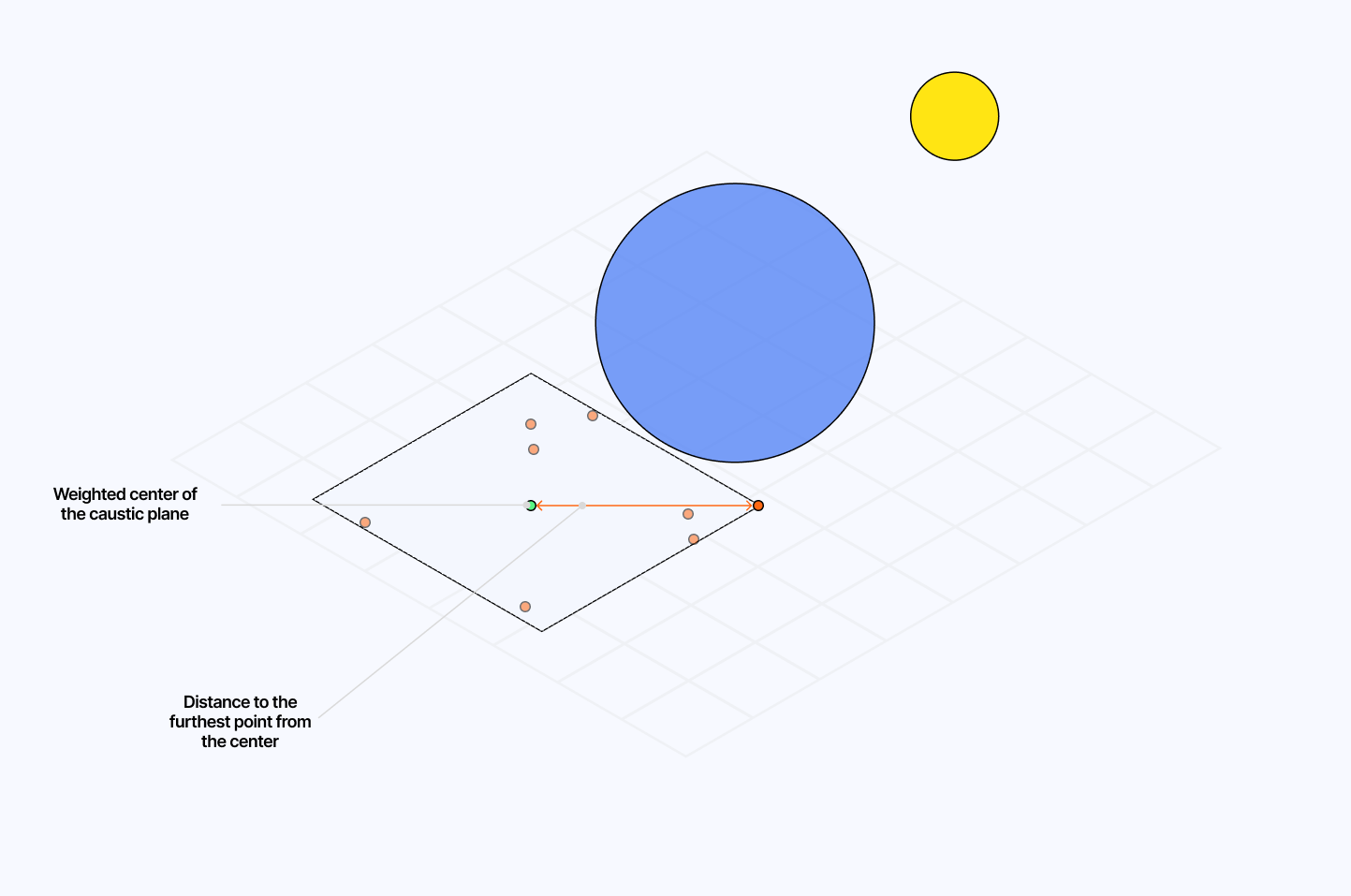

Fitting our caustic pattern inside the plane

Now comes the last step of this tedious process: we need to scale our plane so that no matter the position of the light, the resulting caustic pattern always fits in it.

That is tricky, and to be honest the solution I'm about to give you doesn't work 100% of the time, but it covers most of the use cases I encountered, although I could sometimes notice the pattern being subtly cut by the bounds of the plane.

My train of thought to solve this went as follows:

- We have the projected vertices.

- We got the center position from those vertices.

- Hence, we can assume that the safest scale of the plane, the largest that could for sure fit our caustics, should be the distance from the center to the furthest projected vertices.

Which can be implemented in code using the Euclidean distance formula:

Calculating the safest scale for our plane to fit the caustic pattern

```jsx
// Euclidean distance on the ground plane (x/z) from the center to each
// projected vertex; we keep the largest one
const scale = newVertices
  .map((p) =>
    Math.sqrt(Math.pow(p.x - centerPos.x, 2) + Math.pow(p.z - centerPos.z, 2))
  )
  .reduce((a, b) => Math.max(a, b), 0);

// The scale of the plane is multiplied by this correction factor to
// prevent the caustics pattern from being cut by / overflowing the bounds of the plane
// my normal projection or my math must be a bit off, so I'm trying to be very conservative here
const scaleCorrection = 1.75;

causticsPlane.current.scale.set(
  scale * scaleCorrection,
  scale * scaleCorrection,
  scale * scaleCorrection
);
```
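The center and scale computations can be tried out in isolation on a handful of made-up points. The sketch below uses plain objects rather than `THREE.Vector3`, and `centerOf` and `fitScale` are hypothetical helper names:

```javascript
// Average of the projected points: the center of the plane.
function centerOf(points) {
  const sum = points.reduce(
    (acc, p) => ({ x: acc.x + p.x, y: acc.y + p.y, z: acc.z + p.z }),
    { x: 0, y: 0, z: 0 }
  );
  const n = points.length;
  return { x: sum.x / n, y: sum.y / n, z: sum.z / n };
}

// Largest ground-plane (x/z) distance from the center to any projected point.
function fitScale(points, center) {
  return points
    .map((p) => Math.hypot(p.x - center.x, p.z - center.z))
    .reduce((a, b) => Math.max(a, b), 0);
}

// Four made-up projected points forming a 2 x 2 square on the ground.
const projected = [
  { x: 0, y: 0, z: 0 },
  { x: 2, y: 0, z: 0 },
  { x: 0, y: 0, z: 2 },
  { x: 2, y: 0, z: 2 },
];
const center = centerOf(projected);
console.log(center, fitScale(projected, center));
```

One thing worth keeping in mind: a default `planeGeometry` is 1 × 1, so a scale of `d` yields a plane whose side is `d`, whereas enclosing every point within a distance `d` of the center takes a side of `2 * d`. That may be part of the reason a correction factor approaching 2 turns out to be necessary.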

If we put all this together within our useFrame hook on top of what we've built in the previous part, we finally obtain the long-awaited adjustable caustic pattern ✨.

Our caustic pattern looks gorgeous and behaves as expected as we move the light source around the target mesh! I hope this was worth the trouble so far because there's yet one last thing to explore to make this effect even better...

I would lie to you if I said I wasn't happy with the result above. However, there was still something I wanted to try, and that was to see if the Caustic effect we just built could also handle a moving/displaced mesh and thus feel more dynamic.

On top of that, our effect only really works on shapes that are either very complex or have a lot of intricate, rounded corners, limiting the pool of meshes we can use for a great looking light pattern.

Thus, I had the idea to add a bit of displacement to those meshes to increase their complexity and hope for a better caustic effect. When adding displacement to the vertices of a mesh in a vertex shader, there's one tiny aspect I had overlooked until now: the normals are not recomputed based on the displacement of the vertices out of the box. Thus, if we were to take our target mesh and add some noise to displace its vertices, the resulting Caustic effect would unfortunately remain unchanged.

To solve that, we need to recompute our normals on the fly based on the displacement we apply to the vertices of our mesh in our vertex shader. Luckily, the question of "how to do this" has already been answered by Marco Fugaro from the Three.js community!

I decided to try his method alongside a classic Perlin 3D noise. We can add the desired displacement and the Normal recomputation code to the vertex shader of our original Normal material we introduced in the first part.

Updated Normal material vertex shader

```glsl
uniform float uFrequency;
uniform float uAmplitude;
uniform float time;
uniform bool uDisplace;

varying vec2 vUv;
varying vec3 vNormal;

// cnoise definition ...

vec3 orthogonal(vec3 v) {
  return normalize(abs(v.x) > abs(v.z) ? vec3(-v.y, v.x, 0.0)
  : vec3(0.0, -v.z, v.y));
}

float displace(vec3 point) {
  if(uDisplace) {
    return cnoise(point * uFrequency + vec3(time)) * uAmplitude;
  }
  return 0.0;
}

void main() {
  vUv = uv;

  vec3 displacedPosition = position + normal * displace(position);
  vec4 modelPosition = modelMatrix * vec4(displacedPosition, 1.0);

  vec4 viewPosition = viewMatrix * modelPosition;
  vec4 projectedPosition = projectionMatrix * viewPosition;

  gl_Position = projectedPosition;

  // Displace two nearby points to rebuild a tangent and a bitangent,
  // then derive the new normal from their cross product
  float offset = 4.0/256.0;
  vec3 tangent = orthogonal(normal);
  vec3 bitangent = normalize(cross(normal, tangent));
  vec3 neighbour1 = position + tangent * offset;
  vec3 neighbour2 = position + bitangent * offset;
  vec3 displacedNeighbour1 = neighbour1 + normal * displace(neighbour1);
  vec3 displacedNeighbour2 = neighbour2 + normal * displace(neighbour2);

  vec3 displacedTangent = displacedNeighbour1 - displacedPosition;
  vec3 displacedBitangent = displacedNeighbour2 - displacedPosition;

  vec3 displacedNormal = normalize(cross(displacedTangent, displacedBitangent));

  vNormal = displacedNormal * normalMatrix;
}
```
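To convince yourself that this neighbor-based trick yields sensible normals, you can replay it on the CPU. The sketch below is a plain JavaScript transcription of the recomputation step only; all helper names are hypothetical, and a simple sine wave stands in for the Perlin noise:

```javascript
// Hypothetical vector helpers on [x, y, z] arrays.
const add = (a, b) => a.map((c, i) => c + b[i]);
const sub = (a, b) => a.map((c, i) => c - b[i]);
const scale = (v, s) => v.map((c) => c * s);
const cross = (a, b) => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];
const normalize = (v) => scale(v, 1 / Math.hypot(...v));

// Same branch as the shader's orthogonal(): a vector perpendicular to v.
const orthogonal = (v) =>
  normalize(Math.abs(v[0]) > Math.abs(v[2]) ? [-v[1], v[0], 0] : [0, -v[2], v[1]]);

function displacedNormal(position, normal, displace, offset = 4.0 / 256.0) {
  const tangent = orthogonal(normal);
  const bitangent = normalize(cross(normal, tangent));
  // Pushes a point along the original normal by the displacement amount.
  const move = (p) => add(p, scale(normal, displace(p)));
  const displacedPosition = move(position);
  const displacedTangent = sub(move(add(position, scale(tangent, offset))), displacedPosition);
  const displacedBitangent = sub(move(add(position, scale(bitangent, offset))), displacedPosition);
  return normalize(cross(displacedTangent, displacedBitangent));
}

// Flat plane facing +z, displaced by a sine wave standing in for the noise.
const wave = (p) => Math.sin(p[0]);
console.log(displacedNormal([0, 0, 0], [0, 0, 1], wave));
// roughly [-0.707, 0, 0.707]: tilted against the slope of the wave
```

At `x = 0` the wave climbs at 45 degrees, and the recomputed normal leans back by the same angle, which is what we'd expect from the analytic normal of that surface. Without displacement, the function returns the original normal.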

Since a time component is required for the noise to move, we need to:

- Add a `time` component to our Normal material. That will influence the entire pipeline we built in the previous parts, down to the final caustic effect.

- Add a `time` component and displacement to the original material. Otherwise, it wouldn't make sense that a static mesh would create moving caustics (see final example).

Wiring up the target mesh's material and normal material with time, amplitude and frequency to enable dynamic caustics

```jsx
//...

mesh.current.material = normalMaterial;
mesh.current.material.side = THREE.BackSide;

mesh.current.material.uniforms.time.value = clock.elapsedTime;
mesh.current.material.uniforms.uDisplace.value = displace;
mesh.current.material.uniforms.uAmplitude.value = amplitude;
mesh.current.material.uniforms.uFrequency.value = frequency;

gl.setRenderTarget(normalRenderTarget);
gl.render(mesh.current, normalCamera);

mesh.current.material = originalMaterial;
mesh.current.material.uniforms.time.value = clock.elapsedTime;
mesh.current.material.uniforms.uDisplace.value = displace;
mesh.current.material.uniforms.uAmplitude.value = amplitude;
mesh.current.material.uniforms.uFrequency.value = frequency;

//...
```

We now have wired together all the parts necessary to handle dynamic caustics! Let's take some time to make a beautiful scene with some staging by adding a Spotlight from @react-three/drei and a ground plane that can bounce some light for more realism 🤌 and voilà! We have the perfect scene to showcase our beautiful moving caustics ✨.

Whether you want them subtle, shiny, or colorful, you now know everything about what's behind caustics in WebGL! Or at least, one way to do it! What we saw is obviously one of many possible solutions to building such an effect for the web, and with the advent of WebGPU, I'm hopeful that we'll see more ways to showcase complex light effects like this one with higher quality/physical accuracy and without sacrificing performance. You can already see glimpses of this in one of @active_theory's latest works.

There are a few further improvements I had in mind to make the result of this effect look even better, such as getting a texture of the front side and back side normals of the target mesh to take into account both faces when computing the caustic intensity, and potentially a more elegant/performant way to do chromatic aberration that is less resource-hungry and provides better output.

I'm happy with the caustics I built, although it doesn't seem to result in a beautiful effect for every mesh and I had to resort to last-minute tweaks to fix issues that are most likely due to limitations in my implementation, bad choices in my render pipeline, or simply erroneous math. If you find obvious mistakes: please let me know, and let's work together to fix them! In the meantime, if you wish to have caustics running on your own project, I can't recommend @react-three/drei's own Caustics component enough, which is far more production-grade than the implementation I went through here and will most likely cater to your project much better than this.

I hope this article can spark some creativity in your shader/React Three Fiber work and make the process of building effects or materials you have in mind from scratch less daunting 🙂.